What are CNNs, LLMs, Transformers, RNNs, and GANs and how do they relate to artificial intelligence (AI)?

CNNs (Convolutional Neural Networks) are well suited to computer vision problems such as image classification and object detection.
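To make the "convolutional" part concrete, here is a minimal sketch of the sliding-window operation at the core of a CNN layer, written in plain Python. The `conv2d` function, the toy image, and the hand-picked edge kernel are illustrative assumptions; a real CNN learns its kernel values during training and uses an optimized library rather than nested loops.

```python
def conv2d(image, kernel):
    """Slide the kernel over the image (no padding, stride 1) and
    return the resulting feature map. This is the cross-correlation
    that deep learning libraries usually call 'convolution'."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    output = []
    for i in range(out_h):
        row = []
        for j in range(out_w):
            total = 0
            # Weighted sum of the image patch under the kernel
            for di in range(kh):
                for dj in range(kw):
                    total += image[i + di][j + dj] * kernel[di][dj]
            row.append(total)
        output.append(row)
    return output


# Toy 3x4 "image": dark on the left (0), bright on the right (1)
image = [
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
]

# Hand-picked kernel that responds to dark-to-bright vertical edges
kernel = [
    [-1, 1],
    [-1, 1],
]

feature_map = conv2d(image, kernel)
print(feature_map)  # [[0, 2, 0], [0, 2, 0]]
```

The feature map is nonzero only in the column where the image changes from dark to bright, which is the sense in which a convolutional filter "detects" a local visual pattern.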

Transformers are a type of neural network architecture that has been gaining popularity. OpenAI has used Transformers in its language models, and DeepMind used them in AlphaStar, its program that defeated a top professional StarCraft II player.

LLMs (Large Language Models) are a specialized type of artificial intelligence (AI) that has been trained on vast amounts of text to understand existing content and generate original content.

RNNs (Recurrent Neural Networks) process sequences one element at a time, which has made them a common choice for Natural Language Processing.
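As a rough illustration of what "recurrent" means, the sketch below runs a single-unit RNN over a short sequence in plain Python. The function name `rnn_forward` and the weight values are hypothetical choices for the example; a trained network would learn its weights and would use vectors and matrices rather than single numbers.

```python
import math


def rnn_forward(inputs, w_x=0.5, w_h=0.8, bias=0.0):
    """Run a one-unit recurrent cell over a sequence of numbers.

    At each step the new hidden state depends on the current input
    and on the previous hidden state, so earlier inputs keep
    influencing later steps. Weights here are arbitrary assumptions.
    """
    hidden = 0.0
    states = []
    for x in inputs:
        hidden = math.tanh(w_x * x + w_h * hidden + bias)
        states.append(hidden)
    return states


# Only the first input is nonzero, yet later states stay nonzero
# because the hidden state carries information forward.
states = rnn_forward([1.0, 0.0, 0.0])
print(states)
```

The second and third hidden states are nonzero even though their inputs are zero, which shows how the recurrence gives the network a simple form of memory over the sequence.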

GANs (Generative Adversarial Networks) are an exciting recent innovation in machine learning. GANs are generative models: they create new data instances that resemble your training data. For example, GANs can create images that look like photographs of human faces, even though the faces don’t belong to any real person.

Recently Elad Gil posted an article with insight and history:

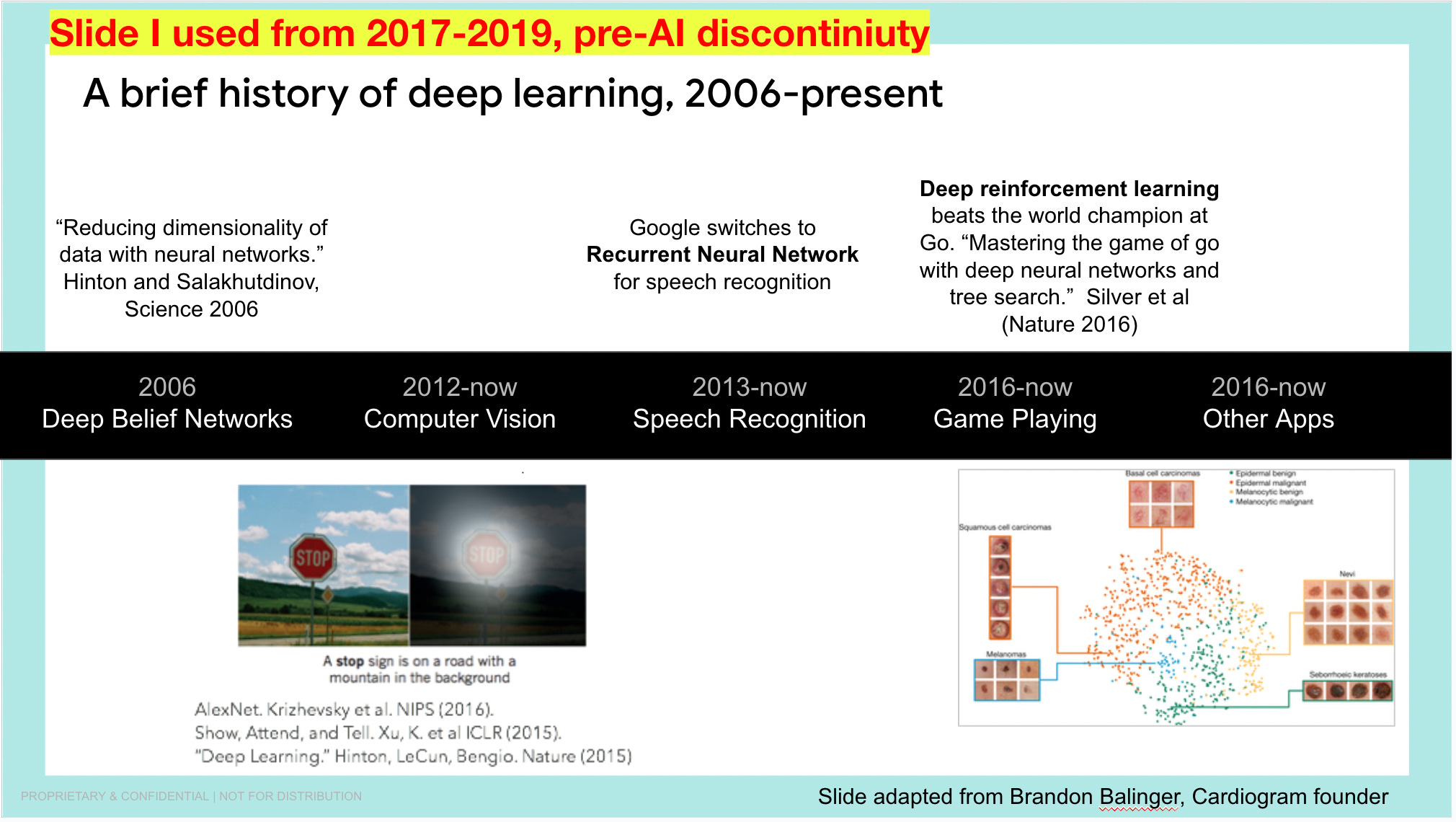

From roughly 2017 to 2019, this slide reflected the AI world.

It is almost like cars existed, and someone invented an airplane and said “an airplane is just another kind of car – but with wings” – instead of mentioning all the new use cases and impact to travel, logistics, defense, and other areas. The era of aviation would have kicked off, not the “era of even faster cars”.